I’ve noticed a peculiar pattern, or more accurately a non-pattern, in all my studies of usable security. At every stage of adoption, people exhibit resistance to cybersecurity measures (such as installing password managers or creating unique passwords for each online account). I expected to see a lot of resistant attitudes in people who do not adopt security practices, or who adopt them only if mandated to do so. But resistance is also high among research participants who have voluntarily adopted security practices and who seem very engaged with cybersecurity overall.

As it happens, I have already developed a measure of security resistance that can help in these studies. Take the average of participants’ Likert-type survey ratings on these four items (1=Strongly Disagree to 5=Strongly Agree) [handout]:

- I am too busy to put in the effort needed to change my security behaviors.

- I have much bigger problems than my risk of a security breach.

- There are good reasons why I do not take the necessary steps to keep my online data and accounts safe.

- I usually will not use security measures if they are inconvenient.
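
As a minimal sketch of the scoring described above, the snippet below averages one participant's four ratings. The short item keys and the function name are hypothetical labels chosen for illustration, and rejecting incomplete or out-of-range responses is an assumption rather than part of the scale's definition.

```python
from statistics import mean

# Hypothetical short keys for the four items, in the order listed above.
RESISTANCE_ITEMS = ("too_busy", "bigger_problems", "good_reasons", "inconvenient")


def resistance_score(ratings):
    """Return the mean of one participant's four ratings (1-5 each)."""
    missing = [key for key in RESISTANCE_ITEMS if key not in ratings]
    if missing:
        # Assumption: incomplete responses are rejected rather than imputed.
        raise ValueError(f"missing ratings for: {missing}")
    values = [ratings[key] for key in RESISTANCE_ITEMS]
    for value in values:
        if not 1 <= value <= 5:
            raise ValueError(f"rating out of range 1-5: {value}")
    return mean(values)


# Illustrative (made-up) response from a single participant.
example = {"too_busy": 4, "bigger_problems": 3, "good_reasons": 2, "inconvenient": 5}
print(resistance_score(example))  # 3.5
```

Higher scores indicate more resistance, since every item is worded in the resistant direction and none needs reverse-coding.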

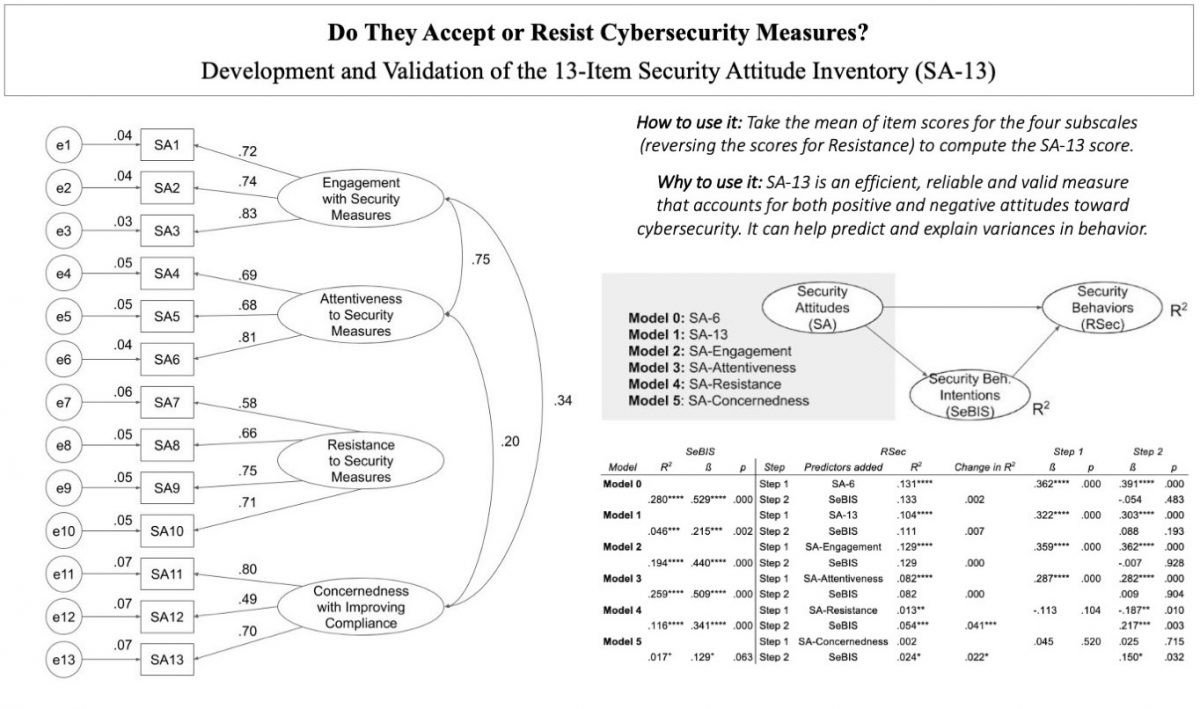

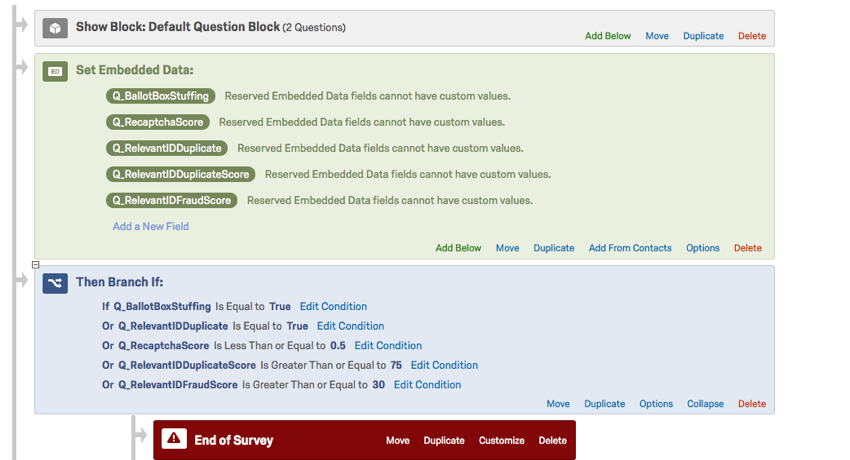

So far, I have found that resistance alone is not a reliable differentiator of someone’s level of cybersecurity adoption. For example, in my working paper describing the development and validation of the SA-13 security attitude inventory, I find that my SA-Resistance scale (the one described above) is significantly negatively associated with self-reported Recalled Security Actions (RSec), but also significantly positively associated with Security Behavior Intentions (SeBIS). By contrast, in a more recent survey (forthcoming), I found that a measure similar to SA-Resistance was significantly positively associated with a self-report measure of password manager adoption, but significantly negatively associated with a measure of being in a pre-adoption stage similar to intention. Faye Kollig, a research assistant in the 2021 REU program, also found no significant differences in resistance among participants in our interview study, which aimed to identify commonalities in security adoption narratives.

At the same time, adding these resistance items to those measuring concernedness, attentiveness, and engagement (the SA-13 inventory) appears to create a reliable predictor of security behavior. In a study at Fujitsu Ltd., Terada et al. found a correlation between SA-13 and actual security behavior for both Japanese and American participants (p<.001) that was stronger than that for SA-6. The authors speculate that this is because of the inclusion of the resistance items.

Is it consistently the case that resistance only helps to differentiate a person’s level of adoption if it is balanced with other attitudes? Or is some other mechanism responsible? I hope to follow up on these results with a student assistant when I join UNC Charlotte’s Department of Software and Information Systems this fall as an assistant professor.

Coincidentally, the New Security Paradigms Workshop has a theme this year of “Resilience and Resistance.” I may submit to the workshop myself, but I also hope that other prospective attendees will find my resistance scale of use in their work.