Our research into account sharing sheds light on social cybersecurity.

Walk through a typical research lab, home kitchen, business office, media room, or retail counter. You're likely to see a piece of paper taped somewhere visible, with the username and password for a WiFi network, an email inbox, or a cloud server account written on it. This is evidence of account sharing: more than one person uses the same authentication credentials (often against stated security policies) to share access to a needed resource. My latest study of this social practice appears in Proceedings of the ACM on Human-Computer Interaction, Vol. 6, CSCW1 (April 2022), and will be presented at CSCW 2022.

Why does account sharing take place?

My Social Cybersecurity group at Carnegie Mellon University has found account sharing to be a "normal and easy" practice in many workplaces and in romantic relationships. Account sharing helps people get work done and strengthen their social bonds. But it also poses many challenges for account security and for legitimate users of the system. We found, for instance, that romantic couples don't use two-factor authentication despite it being a best practice, mostly because it causes problems when one partner tries to log in and the other can't respond to the smartphone notification. We also found that workers reported problems when colleagues who were fired or quit changed the password of a shared account, locking everyone else out.

How do people typically share accounts?

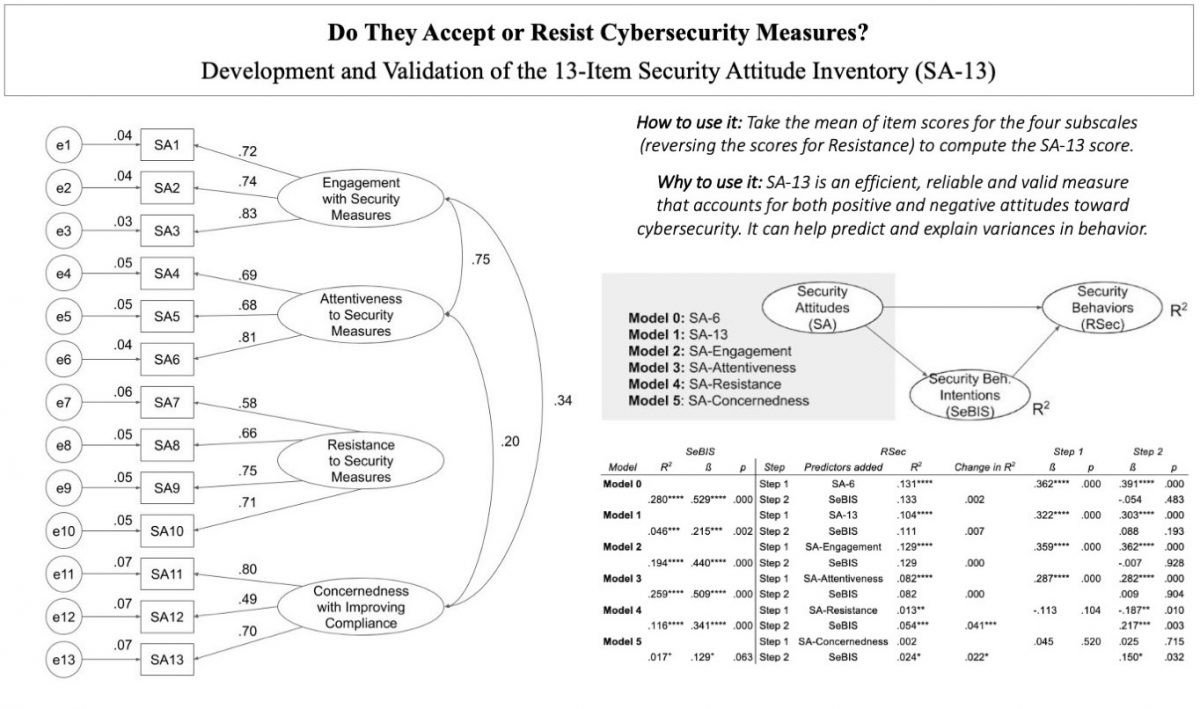

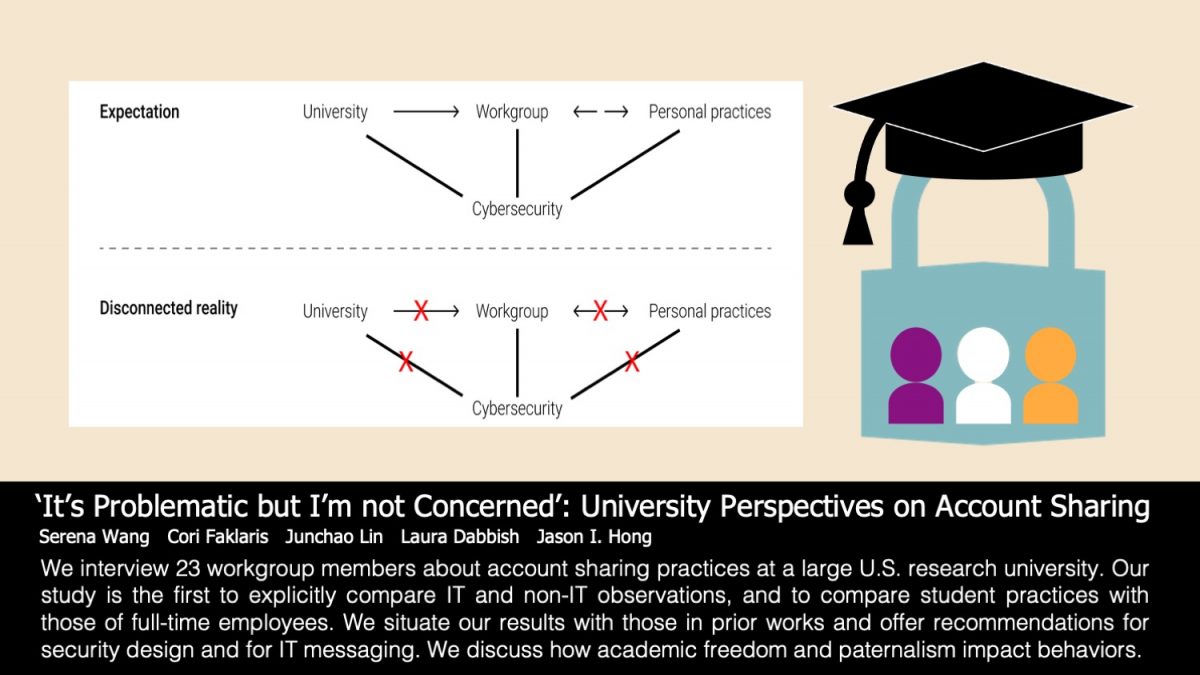

In this latest research, an interview study at a U.S. research university (N=23), 17 participants (74%) reported sharing accounts, and they did so in three ways. First, they shared passwords unofficially but securely through an individual password manager (software that stores your passwords in an encrypted vault). Second, they shared passwords officially through an Enterprise Random Password Manager (ERPM), a more powerful password manager for IT departments that manage many devices and control remote access. Third, they shared passwords unofficially through ad-hoc methods, such as posting them in plain text in a group chat. As in prior work, many of the shared accounts were for cloud services (frequently Amazon or Google) or social media (Instagram and Twitter). Crucially, however, no one in our study reported sharing a university email account linked to their personal identity. This may reflect the university's success in teaching users to keep this credential confidential.

My co-author, Serena Wang, and I found that the account-sharing practices of non-IT workers and staff were less organized than those of IT workers and students. We also note the influence of two context-specific norms: educational paternalism, shown in participants' stated trust that campus authorities would keep their data and accounts safe; and academic freedom, which implies no limits on technology use and a corresponding lack of top-down security mandates. We think these norms set universities apart from other workplaces and show how social norms are likely to shape people's cybersecurity behaviors. For example, a negative influence would be less oversight of anyone doing "enabling" work for researchers, such as processing credit-card payments. A positive influence would be that users are likely to comply when the university asks them to use a provided password management system for secure account sharing.

What can be done to change account sharing practices?

My group's overall recommendation from this and other studies has been for system architects to change the "1-user-1-account" design of authentication systems so that accounts can be securely shared among multiple people. Changes along these lines have been implemented in several systems, such as Microsoft's Azure Active Directory. We also worked with two undergraduates in the School of Computer Science to design and build a simple social authentication tool. This tool would let a user log into a shared research lab account by correctly answering questions about what happened during the lab's last group meeting.
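To make the idea of meeting-based social authentication more concrete, here is a minimal Python sketch of how such a check might work. It is not the code of the tool described above: the class name `MeetingQuiz`, the k-of-n threshold, the answer normalization rule, and the use of salted PBKDF2 digests to store answers are all hypothetical choices made for illustration.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # illustrative PBKDF2 work factor, not a tuned value


def normalize(answer: str) -> str:
    # Hypothetical rule: ignore case and extra whitespace so "Room  3001" matches "room 3001".
    return " ".join(answer.lower().split())


def hash_answer(answer: str, salt: bytes) -> bytes:
    # Store only a salted PBKDF2 digest, never the plaintext answer.
    return hashlib.pbkdf2_hmac("sha256", normalize(answer).encode("utf-8"), salt, ITERATIONS)


class MeetingQuiz:
    """Hypothetical k-of-n challenge built from facts about the last group meeting."""

    def __init__(self, qa: dict[str, str], threshold: int) -> None:
        if not 1 <= threshold <= len(qa):
            raise ValueError("threshold must be between 1 and the number of questions")
        self.threshold = threshold
        self._stored: dict[str, tuple[bytes, bytes]] = {}
        for question, answer in qa.items():
            salt = os.urandom(16)  # fresh random salt per question
            self._stored[question] = (salt, hash_answer(answer, salt))

    def questions(self) -> list[str]:
        # The prompts shown to someone trying to log in.
        return list(self._stored)

    def verify(self, responses: dict[str, str]) -> bool:
        # Count correct answers; unanswered or unknown questions count as wrong.
        correct = 0
        for question, (salt, digest) in self._stored.items():
            if question in responses:
                # compare_digest performs a constant-time comparison of the digests.
                if hmac.compare_digest(digest, hash_answer(responses[question], salt)):
                    correct += 1
        return correct >= self.threshold


if __name__ == "__main__":
    quiz = MeetingQuiz(
        {
            "Who presented at the last meeting?": "Alex",
            "Which paper did the group discuss?": "password sharing study",
            "Where was the meeting held?": "room 3001",
        },
        threshold=2,
    )
    # Two of three correct answers meet the threshold.
    print(quiz.verify({
        "Who presented at the last meeting?": " alex ",
        "Where was the meeting held?": "Room 3001",
    }))  # True
```

Requiring only k of n correct answers tolerates a lab member who missed part of the meeting, while hashing the answers avoids the plaintext-in-a-group-chat problem participants described. A real deployment would still need safeguards this sketch omits, such as rate limiting and new questions after each meeting.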

Our next step is to discuss with real-life university information security teams how feasible our recommendations are for research universities. The need for more attention to cybersecurity in educational institutions, particularly in higher education, is urgent. One U.S. university official estimated that their institution received an average of 20 million attacks a day, describing this as "typical for a research university." Threats facing the education sector include ransomware and stolen-credential attacks, with characteristics unique to the sector. Verizon reports that education is now "the only industry where malware distribution to victims was more common via websites than email," attributing this to the many "students on bring-your-own devices connected to shared networks." Please contact me if you would like to consult with us, or read our paper for details specific to end-user cybersecurity in educational and research contexts.

- Serena Wang, Cori Faklaris, Junchao Lin, Laura Dabbish, and Jason I. Hong. 2022. “It’s Problematic but I’m not Concerned”: University Perspectives on Account Sharing. In Proceedings of the ACM on Human-Computer Interaction, Vol. 6, CSCW1, Article 68 (April 2022), 27 pages. https://doi.org/10.1145/3512915